Unlocking the Secrets of Self-Organization

How Recursion, Incursion, and Syntropy Drive Self-Reference and Order in Complex Physical Systems

🧠 A single feedback loop can stabilize billions of patterns. What if information, not energy, is the main driver of order?

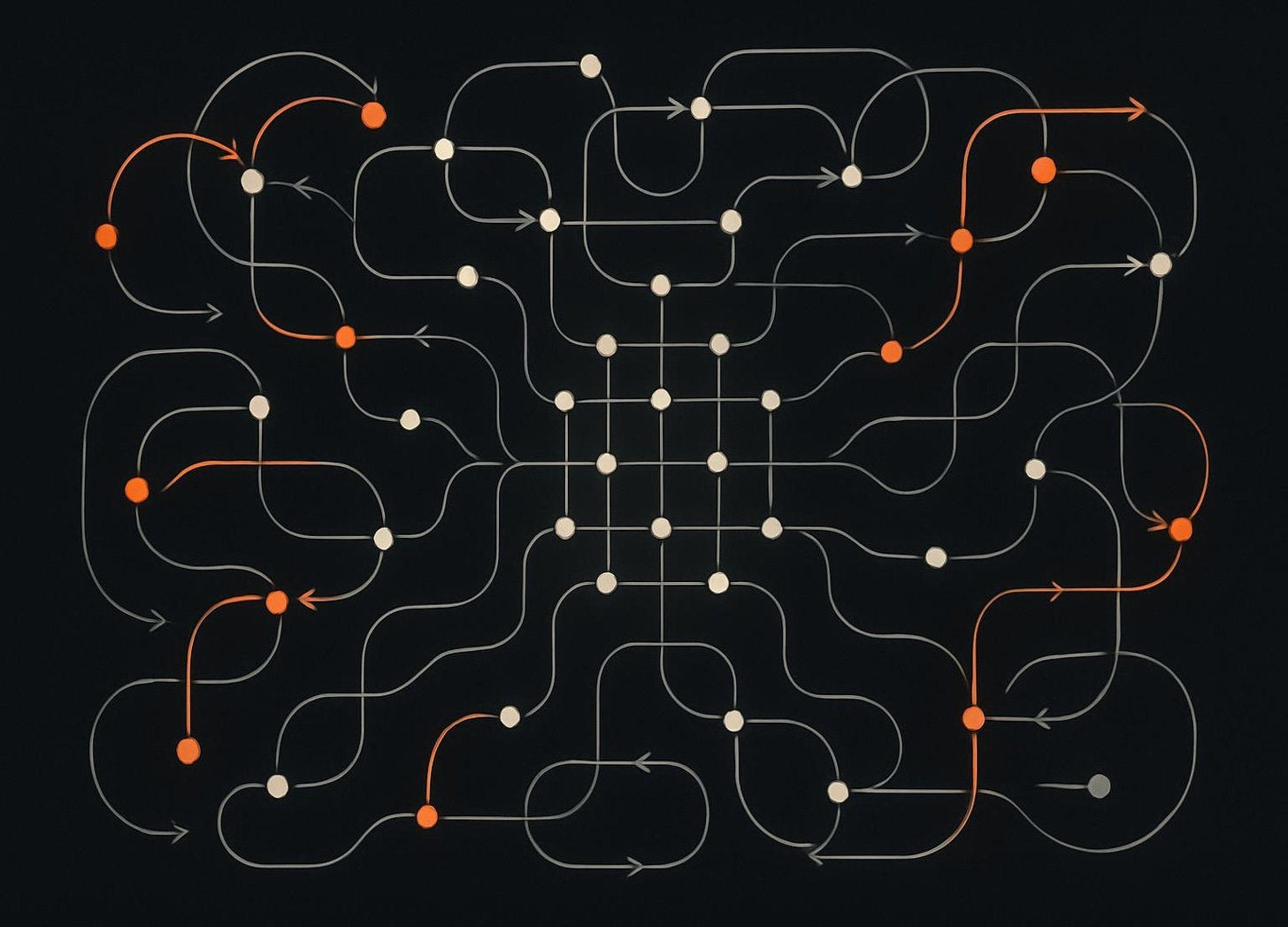

I’ve long been interested in complex physical systems that show remarkable self-organization, and I suspect the true engines behind their stability hide in plain sight. Recursion and incursion drive self-reference, anticipation, and the regeneration of order from within. This isn’t about perpetual motion; it’s about how internal informational feedback, not just external energy, can restore coherence and keep patterns alive.

Understanding syntropy, the tendency of systems to self-organize through internal feedback, opens new doors for engineering, physics, and even consciousness research. These principles combine first-principles mechanics with real-world adaptability and offer insight into how systems resist chaos and regenerate structure after disruption.

👉 Subscribe to Advanced Rediscovery. I’m sharing what I find as I decode zero-point energy, the quantum vacuum, and extended electromagnetism: 12+ years deep in 2000+ papers and counting. Weekly briefings from my home lab to your inbox.

In today’s briefing

🔍 Recursion and incursion create self-reference and anticipation in physical systems.

⚡ Syntropy describes order restored from within, not just by outside energy.

🧲 Nonequilibrium systems stabilize patterns through feedback and structured flows.

🤔 Teleonomic goals emerge from fixed points in anticipatory models.

🧩 Adaptive selection lets systems tune between stability and exploration.

Self-Reference, Recursion, and Syntropy in Complex System Dynamics

Self-organizing systems often rely on recursion and incursion as core operating principles. These mechanisms let patterns regenerate and persist even when the environment is noisy or unpredictable.

Syntropy describes how systems maintain order from within, using internal informational feedback rather than relying on a steady stream of outside energy. This internal causation is what makes complex systems robust and adaptive.

Recursion and incursion use feedback and anticipation to regenerate patterns and restore order in complex systems.

I’ve recently been researching recursive systems, which update themselves using their own past and present states. In physical systems, this might be an oscillator that repeats its cycle or a chemical reaction that regenerates its own catalysts. Incursion goes further: the system’s update also includes its own anticipated future state, so the system doesn’t just react but anticipates and adapts.
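The minimal Python sketch below makes the recursion/incursion distinction concrete. It follows the spirit of Dubois’s incursive oscillator examples [1], but the function name `simulate` and the parameter values are illustrative assumptions. In numerical terms, the incursive update is the familiar semi-implicit (symplectic) Euler scheme.

```python
def simulate(x0: float, v0: float, omega: float, dt: float, steps: int, incursive: bool) -> float:
    """Iterate a discrete harmonic oscillator and return its final energy measure.

    recursive: both updates use only the current state x(t), v(t).
    incursive: the velocity update uses the already-computed next position
               x(t+1), i.e. a future state enters the present update.
    """
    x, v = x0, v0
    for _ in range(steps):
        x_next = x + dt * v
        if incursive:
            # Incursion: v(t+1) depends on x(t+1).
            v_next = v - dt * omega**2 * x_next
        else:
            # Plain recursion: v(t+1) depends only on x(t).
            v_next = v - dt * omega**2 * x
        x, v = x_next, v_next
    # Twice the energy for unit mass; constant for the exact continuous oscillator.
    return v**2 + (omega * x) ** 2


recursive_energy = simulate(1.0, 0.0, omega=1.0, dt=0.1, steps=1000, incursive=False)
incursive_energy = simulate(1.0, 0.0, omega=1.0, dt=0.1, steps=1000, incursive=True)
print(f"recursive: {recursive_energy:.1f}, incursive: {incursive_energy:.3f}")
```

With omega = 1, the recursive energy grows by exactly (1 + dt²) each step, so after 1000 steps it has blown up by a factor of about 2 × 10⁴. The incursive version exactly conserves x² + v² + dt·x·v. Its energy therefore stays within roughly ±5% of the starting value, and the oscillation keeps its cycle instead of spiraling outward.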

Hyperincursive models, as described by Dubois, create multiple possible futures at each step. The system then needs a way to select which path to realize, either through internal rules or environmental feedback. This branching is a hallmark of adaptive, resilient systems. Anticipatory memory becomes a way to store these potential futures, giving the system a repertoire of responses to draw from.
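The branching can be sketched with a hyperincursive logistic map of the form x(t) = 4a·x(t+1)·(1 − x(t+1)), modeled on the example Dubois discusses [1]. Solving for x(t+1) gives two admissible futures at every step. In the code, a sequence of ±1 decisions stands in for the "internal rules or environmental feedback" that pick one of them. The names `candidates` and `run` are hypothetical.

```python
import math
from typing import Iterable, List, Tuple


def candidates(x: float, a: float = 1.0) -> Tuple[float, float]:
    """Return both next states y that satisfy x = 4*a*y*(1 - y)."""
    disc = 1.0 - x / a
    if disc < 0:
        raise ValueError("x must not exceed a for real-valued futures")
    r = 0.5 * math.sqrt(disc)
    return 0.5 - r, 0.5 + r


def run(x0: float, decisions: Iterable[int], a: float = 1.0) -> List[float]:
    """Realize one branch per step: a negative decision picks the lower root, a positive one the upper."""
    path = [x0]
    for d in decisions:
        low, high = candidates(path[-1], a)
        path.append(high if d > 0 else low)
    return path


print(candidates(0.75))     # (0.25, 0.75): two admissible futures
print(run(0.75, [+1, -1]))  # [0.75, 0.75, 0.25]: one realized path
```

Storing the pair returned by `candidates` before a decision is made is one concrete reading of "anticipatory memory": the system holds its possible futures and only then commits to one.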

Syntropy reframes self-organization as an internal process. Instead of relying on constant external energy input, open systems can regenerate coherence through feedback loops. This is seen in electromagnetic field configurations, biological pattern formation, and even in some engineered systems. The result is dynamic equilibrium, where order is continuously restored despite ongoing disturbances.
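The toy model below illustrates order being "continuously restored despite ongoing disturbances." It compares a state kicked by random noise with and without an internal corrective feedback loop. This is a generic proportional-feedback (AR(1)) sketch, not a physical model of syntropy, and the function name, gain, noise level, and seed are assumptions.

```python
import random


def mean_square_deviation(gain: float, steps: int = 10_000, noise: float = 0.1, seed: int = 0) -> float:
    """Average squared distance from the ordered state (0.0) under random kicks.

    gain = 0.0: no internal feedback, so disturbances accumulate as a random walk.
    gain > 0.0: each step removes a fraction `gain` of the current deviation.
    """
    rng = random.Random(seed)
    x, total = 0.0, 0.0
    for _ in range(steps):
        # Feedback correction plus a fresh random disturbance.
        x = x - gain * x + rng.gauss(0.0, noise)
        total += x * x
    return total / steps


open_loop = mean_square_deviation(gain=0.0)
closed_loop = mean_square_deviation(gain=0.2)
print(f"open loop: {open_loop:.2f}, with feedback: {closed_loop:.4f}")
```

Without feedback, the expected squared deviation grows linearly with time. With a gain of 0.2 it settles near noise² / (1 − 0.8²) ≈ 0.028. That is a dynamic equilibrium: disturbances keep arriving, but they never accumulate.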

Anticipatory memory stores potential futures and guides present actions, closing an informational loop between foresight and realized outcomes.

The interplay of recursion, incursion, and syntropy offers new ways to understand and engineer self-sustaining systems. By focusing on internal informational feedback, it’s possible to design mechanisms that resist chaos, adapt to change, and maintain coherence across scales.

Why it matters

🧠 Recursion and incursion enable self-reference and anticipation.

⚡ Syntropy supports order via internal feedback.

🧩 Adaptive selection guides stable pattern regeneration.

References [1–5]

Mechanisms of Self-Organization and Stability in Nonequilibrium Steady States

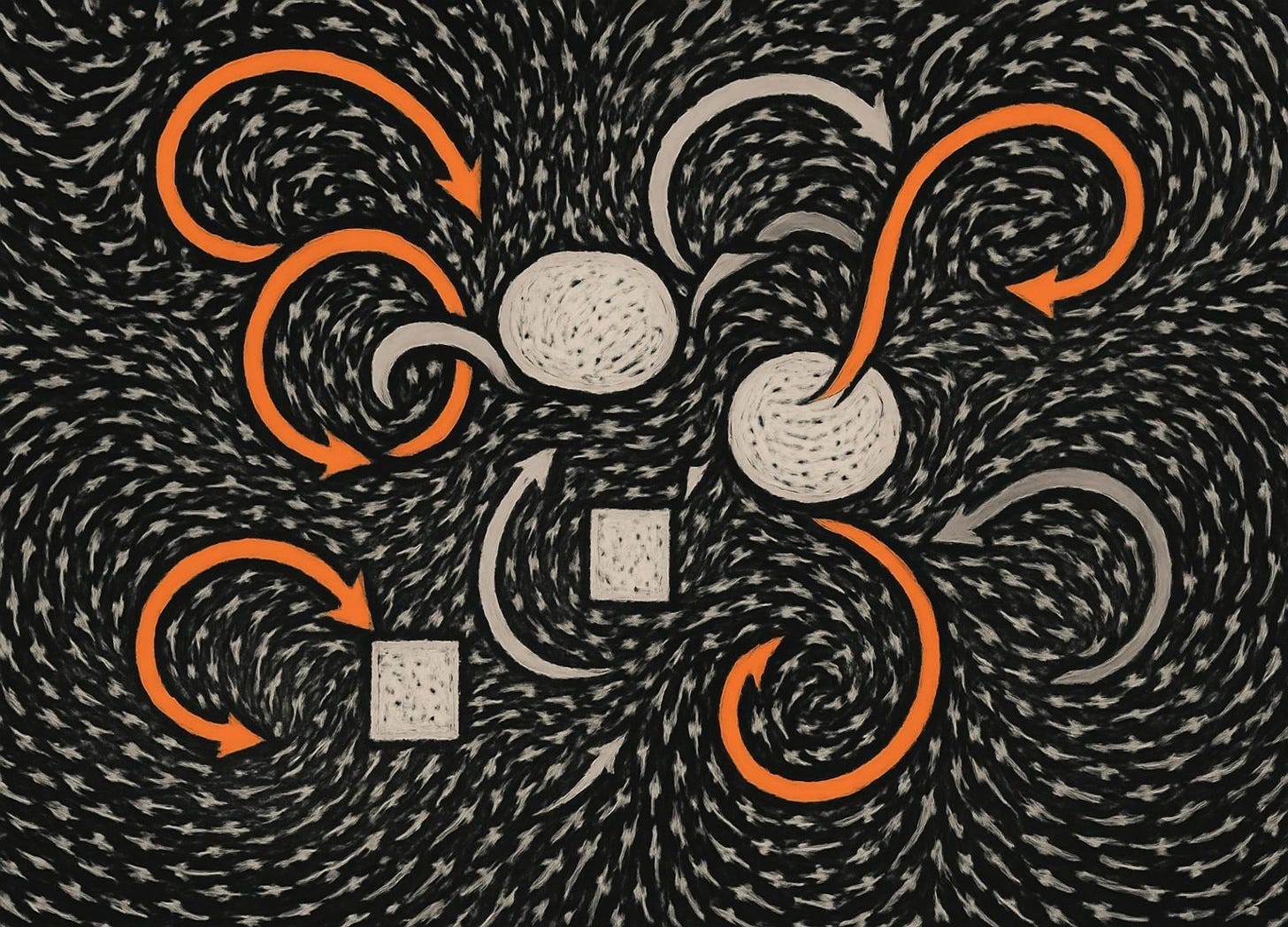

Nonequilibrium steady states show that order can persist even when systems are open and constantly exchanging energy with their environment. These states rely on feedback, structured flows, and ongoing replenishment to stabilize patterns.

Negative entropy production and self-oscillation are two mechanisms that let these systems absorb disturbances, regenerate order, and resist decay without needing isolation or perfect symmetry.

Nonequilibrium steady states use continuous feedback and structured energy flows to maintain order under constant disturbance.

Open systems driven far from equilibrium can self-organize into low-entropy structures. This happens when environmental gradients and broken symmetries channel energy into organized flows. Instead of decaying into randomness, the system uses feedback to maintain coherent patterns.
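The Brusselator is a textbook model of a far-from-equilibrium chemical reaction, and it shows this threshold behavior. The reactant concentrations a and b are held fixed by the environment. When b > 1 + a², the uniform steady state (a, b/a) loses stability and the system settles into a sustained, self-organized oscillation. This model is an illustrative choice, not one taken from the sources, and the function name and parameters are assumptions.

```python
def brusselator_swing(a: float, b: float, dt: float = 0.01, t_end: float = 100.0) -> float:
    """Integrate the Brusselator with RK4 and return the late-time peak-to-peak range of x."""

    def rhs(x: float, y: float):
        # Fixed reactant concentrations a and b act as the external drive (the system is held open).
        return a + x * x * y - (b + 1.0) * x, b * x - x * x * y

    x, y = a + 0.5, b / a + 0.5  # start slightly away from the fixed point (a, b/a)
    steps = round(t_end / dt)
    tail = round(20.0 / dt)
    window = []
    for i in range(steps):
        k1 = rhs(x, y)
        k2 = rhs(x + 0.5 * dt * k1[0], y + 0.5 * dt * k1[1])
        k3 = rhs(x + 0.5 * dt * k2[0], y + 0.5 * dt * k2[1])
        k4 = rhs(x + dt * k3[0], y + dt * k3[1])
        x += dt / 6.0 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        y += dt / 6.0 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        if i >= steps - tail:  # record x only during the last 20 time units
            window.append(x)
    return max(window) - min(window)


print(brusselator_swing(a=1.0, b=1.5))  # below threshold: settles to a steady state
print(brusselator_swing(a=1.0, b=3.0))  # above threshold: sustained oscillation
```

With a = 1 the threshold is b = 2. The first call therefore returns a range near zero, and the second returns a range well above 1.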

Negative entropy production means that the system exports more entropy to its surroundings than it generates internally, so part of the input energy goes into building or maintaining order instead of only producing heat. Structured potentials and phase relations in electromagnetic or biological systems help these patterns persist even under constant disturbance. Self-oscillation and rhythmic processes act as internal clocks, continually regenerating order.
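Standard nonequilibrium thermodynamics makes this idea precise through Prigogine’s entropy balance. The entropy change of an open system splits into an exchange term d_eS and an internal production term d_iS, and the second law constrains only the internal term:

```latex
\begin{aligned}
\frac{dS}{dt} &= \frac{d_eS}{dt} + \frac{d_iS}{dt}, \qquad \frac{d_iS}{dt} \ge 0, \\
\frac{dS}{dt} = 0 \;&\Rightarrow\; \frac{d_eS}{dt} = -\frac{d_iS}{dt} \le 0 .
\end{aligned}
```

In a steady state, the system exports entropy exactly as fast as it produces it. It can grow more ordered (dS/dt < 0) only while export outpaces internal production. Strictly, only the net change dS can turn negative; d_iS never does.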

The environment isn’t just a source of noise: by regulating the exchange of energy and information, systems can reach dynamic equilibrium, where order is maintained not by storing energy but by managing flows. This principle shows up in lasers, living cells, and engineered devices that mimic natural resilience.

Self-ordering is stabilized by ongoing replenishment of ordered motion from the drive, allowing perturbations to be damped or redirected without loss of coherence.
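The van der Pol oscillator is a standard minimal model of self-oscillation. A nonlinear damping term feeds energy in when the motion is small and removes it when the motion is large. Trajectories that start from very different states are therefore pulled onto the same limit cycle, so perturbations are "damped or redirected" back to one coherent rhythm. The function name and parameters below are illustrative assumptions, not taken from the sources.

```python
def vdp_amplitude(x0: float, v0: float, mu: float = 1.0, dt: float = 0.01, t_end: float = 60.0) -> float:
    """Integrate x'' - mu*(1 - x**2)*x' + x = 0 with RK4.

    Returns the peak |x| over the last 15 time units, i.e. the amplitude
    the oscillation has settled into.
    """

    def rhs(x: float, v: float):
        # For |x| < 1 the term mu*(1 - x*x)*v pumps energy in (negative damping);
        # for |x| > 1 the same term removes energy (positive damping).
        return v, mu * (1.0 - x * x) * v - x

    x, v = x0, v0
    steps = round(t_end / dt)
    tail = round(15.0 / dt)
    peak = 0.0
    for i in range(steps):
        k1 = rhs(x, v)
        k2 = rhs(x + 0.5 * dt * k1[0], v + 0.5 * dt * k1[1])
        k3 = rhs(x + 0.5 * dt * k2[0], v + 0.5 * dt * k2[1])
        k4 = rhs(x + dt * k3[0], v + dt * k3[1])
        x += dt / 6.0 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        v += dt / 6.0 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        if i >= steps - tail:
            peak = max(peak, abs(x))
    return peak


print(vdp_amplitude(0.1, 0.0))  # starts from a small displacement
print(vdp_amplitude(4.0, 0.0))  # starts from a large displacement
```

Both runs should settle to a peak amplitude close to 2, the known limit-cycle amplitude for mu = 1, whatever the starting disturbance.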

Self-organization in nonequilibrium steady states is less about resisting change and more about harnessing it. By managing flows and feedback, systems can stay ordered, robust, and adaptable even under constant pressure.

Why it matters

🧲 Open systems use feedback to stabilize order.

⚡ Negative entropy production supports persistent patterns.

🧠 Structured flows and self-oscillation regenerate coherence.

References [2–6, 8–10]

Final Thoughts

Recursion and incursion show us that internal feedback can stabilize and regenerate patterns, even when external conditions are unpredictable. The assumption is that systems with anticipatory informational feedback can maintain coherence without constant external correction. Evidence from incursive models, nonequilibrium steady states, and anticipatory memory supports this view. Open questions remain about the limits and scalability of these dynamics.

If syntropy truly describes a universal tendency for systems to restore order through informational loops, then the boundaries between physics, computation, and biology blur. Hypotheses about adaptive selection, dynamic teleonomy, and self-sustaining order suggest new ways to build resilient technologies and interpret natural phenomena.

What if the Universe itself, as some propose, is a vast anticipatory system seeking coherence through internal causation?

Quick Recap

🧠 Recursion and incursion drive self-reference and anticipation.

⚡ Syntropy enables order via internal informational feedback.

🧲 Nonequilibrium systems stabilize patterns through structured flows.

🤔 Teleonomic goals and adaptive selection support dynamic stability.

💡 Test these principles in your own field. Challenge the idea that only external energy keeps systems stable. Share your results and spark debate!

Glossary

Recursion: A process where a system updates itself based on its own previous states, creating self-reference.

Incursion: A generalization of recursion where a system’s update includes its own anticipated future state, enabling internal causation.

Syntropy: The tendency of open systems to restore or maintain order through internal informational feedback, counteracting entropy.

Teleonomy: Goal-directedness in systems, where fixed points or objectives are embedded in the system’s own dynamics.

Anticipatory Memory: A mechanism by which systems store and use information about potential futures to guide present actions.

Nonequilibrium Steady State: A stable state in open systems maintained by continuous energy flow and regulated exchange with the environment.

Negative Entropy: A measure of order or information gained in a system, often associated with self-organization and pattern formation.

Hyperincursion: A form of incursion where multiple potential futures are generated at each step, requiring selection mechanisms.

Feedforward Loop: A control mechanism where predicted future conditions influence present states, contrasting with feedback control.

Dynamic Equilibrium: A state where ongoing processes balance each other, allowing systems to maintain order amid constant change.

Sources & References

[1] Dubois DM. Introduction to Computing Anticipatory Systems. 1998.

[2] Bearden TE. Annotated Glossary. 2000.

[3] Moore K; Stockton M; Bearden TE. Electromagnetic Energy from the Vacuum – System Efficiency (ε) and Coefficient of Performance (COP) of Symmetric and Asymmetric Maxwellian Systems. 2006.

[4] Bearden TE. Oblivion – America on the Brink. 2005.

[5] Bearden TE. The Motionless Electromagnetic Generator – Extracting Energy from a Permanent Magnet with Energy-Replenishing from the Active Vacuum. 2018.

[6] Bearden TE. Excalibur Briefing – Explaining Paranormal Phenomena. 1988.

[7] Montalk T. Fringe Knowledge for Beginners. 2008.

[8] Bearden TE. EM Energy from the Vacuum – Ten Questions with Extended Answers. 2000.

[9] Bearden TE. Dark Energy or Dark Matter. 2000.

[10] Kelly PJ. Practical Guide to ‘Free Energy’ Devices. 2020.

🧑🏼 Follow @drxwilhelm on X (Twitter), Substack, Medium, TikTok, YouTube
